Creating better prompts, interrogating the outputs of these models, and regularly comparing the results to known facts are great ways to reduce the risk of using these LLMs in your practice. But there are other ways to improve the likelihood of correct and useful answers from generative AI, including selecting the right model to use in specific legal domains.

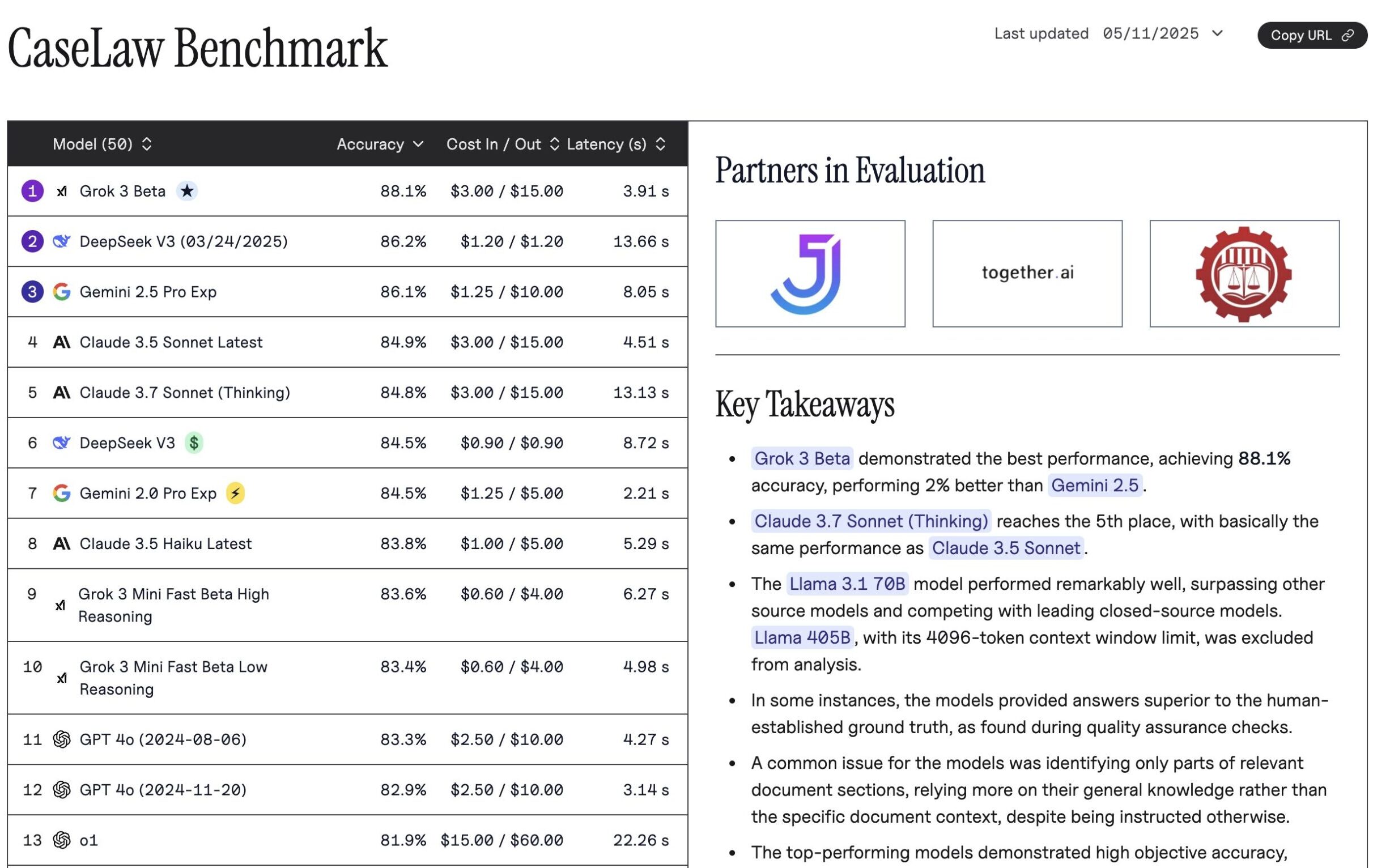

Recent results show that OpenAI is no longer the clear leader in legal generative AI. Just this week, Vals AI released its most recent legal benchmarking data, with some surprising results: Grok 3 Beta (88.1% accuracy) surpassed Google’s Gemini 2.5 Pro Exp (86.1% accuracy). These benchmarks measure how closely each model’s answers match known answers submitted by legal professionals. Jurisage is one of Vals AI’s partners in validating known case law datasets.

What can legal professionals learn from these benchmarking studies? Most of our recent conversations with clients and others in the space have been about what data we include and what language we use to prompt these general-purpose LLMs. There is an opportunity to extend that conversation to which models are being used. Not every LLM performs equally well on case law datasets, not every LLM costs the same to prompt, and not every LLM generates responses at the same speed.

Why are benchmarks so important when it comes to generative AI in the legal space? Language matters in the legal profession. The quality of an LLM’s output determines the confidence legal professionals will have in the technology moving forward. We know AI tools are here to stay, but which ones to use and how to use them are still open questions. Companies like Vals AI are clarifying how this new technology performs through benchmarks, which Jurisage is proud to help build and verify for the legal profession.

Full results from the Vals AI case law benchmarking study can be found here.